Introduction

In today’s rapidly evolving digital landscape, the need to safeguard personal data has never been more critical. Data privacy concerns dominate headlines with increasing frequency, fueled by high-profile data breaches, privacy scandals, and growing awareness of how much personal information is collected and shared online. As the world becomes more data-driven, protecting individual privacy while still allowing businesses and organizations to harness the power of data has become a balancing act.

Among the many privacy-preserving techniques, differential privacy has emerged as a key concept in ensuring that data remains both useful and secure. With increasing reliance on technologies such as artificial intelligence (AI), machine learning (ML), and big data analytics, ensuring data privacy is becoming more complex. However, differential privacy offers a unique solution to this challenge. In this blog, we will explore differential privacy in detail, its mechanisms, applications, and future trends that will shape data privacy strategies in the coming years.

What is Differential Privacy?

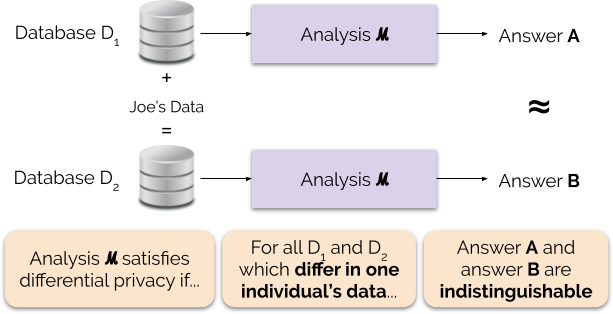

Differential privacy is a robust mathematical framework designed to protect the privacy of individuals within a dataset while still allowing the dataset to be analyzed for useful insights. At its core, the goal of differential privacy is to ensure that the removal or addition of a single data point does not significantly affect the outcome of any analysis, making it difficult to trace any results back to an individual in the dataset.
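
For readers who want the formal statement behind this idea, the standard textbook definition (not spelled out in this post) can be written as follows. A randomized mechanism $M$ satisfies $\varepsilon$-differential privacy if, for every pair of datasets $D$ and $D'$ that differ in a single record, and for every set $S$ of possible outputs:

$$
\Pr[M(D) \in S] \;\le\; e^{\varepsilon} \cdot \Pr[M(D') \in S]
$$

Here $\varepsilon$ is the privacy parameter. When $\varepsilon$ is close to zero, $e^{\varepsilon}$ is close to 1, so the output distribution is nearly the same whether or not any one person's record is included.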

Core Concepts of Differential Privacy

- Privacy Budget: Differential privacy operates on a concept called the “privacy budget,” often referred to as the epsilon (ε) parameter. The privacy budget determines the level of noise that is added to the data to protect privacy. A smaller epsilon means stronger privacy protection but at the cost of less accurate data analysis, whereas a larger epsilon allows for more accurate results but weaker privacy protection.

- Noise Injection: To preserve privacy, differential privacy works by adding noise to the data or to the query results. This noise is designed in such a way that it doesn’t reveal any specific individual’s data but allows for meaningful aggregate insights to be drawn.

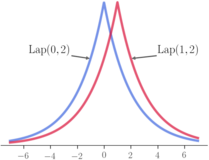

- Randomized Algorithms: The mechanisms behind differential privacy are based on randomized algorithms. These algorithms modify the original data or query results in a controlled manner by introducing randomness (often noise drawn from a Laplace or Gaussian distribution). This randomness ensures that the presence or absence of a single individual’s data has little to no impact on the overall analysis.
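
To make the three concepts above concrete, here is a minimal sketch of a noisy counting query using only the Python standard library. The function names (`laplace_noise`, `noisy_count`) and the sample data are made up for illustration, and the sketch assumes a simple count, for which adding or removing one record changes the answer by at most 1. It is not a production implementation; real deployments generally rely on a vetted library.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential variables with mean
    `scale` follows a Laplace distribution centered at zero.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Answer "how many records satisfy predicate?" with added Laplace noise.

    One record changes a count by at most 1, so the noise scale is
    1 / epsilon: a smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    ages = [23, 35, 41, 29, 62, 57, 33, 48, 19, 71]  # made-up data
    for eps in (0.1, 1.0, 10.0):
        answer = noisy_count(ages, lambda a: a >= 40, eps, rng)
        print(f"epsilon={eps}: noisy count = {answer:.2f} (true count is 5)")
```

Running the loop with several values of epsilon shows the trade-off described under Privacy Budget: answers produced with a small epsilon tend to land far from the true count, while answers produced with a large epsilon tend to land close to it.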

Mechanisms for Achieving Differential Privacy

Differential privacy is achieved through different mechanisms, primarily focusing on adding noise to the data in a way that the statistical queries performed on the data remain useful but still provide privacy protection. The most common mechanisms include:

- Laplace Mechanism: The Laplace mechanism adds noise that follows a Laplace distribution, which is centered around zero and has a “fatter tail” than the normal distribution. It is particularly useful for numerical queries such as counts and sums. The amount of noise is scaled to how much a single data point can change the query result, divided by epsilon, so that any one individual’s contribution is masked.

- Gaussian Mechanism: The Gaussian mechanism adds noise drawn from a normal (Gaussian) distribution. It satisfies a slightly relaxed guarantee known as (ε, δ)-differential privacy, where δ is a small probability that the pure ε guarantee does not hold. It is often preferred when many values are released at once, as in machine learning, and it can be tuned to provide different levels of privacy depending on the dataset and the application.

- Exponential Mechanism: This mechanism is used for non-numeric outputs, such as categorical or qualitative information, where adding numeric noise makes no sense. It assigns each candidate output a utility score and selects an output at random, with probability that grows exponentially with its score. Good answers are favored, but no single individual’s data decides the result.

- Shuffling and Randomized Response: In randomized response, each participant randomly alters their own answer with a known probability before reporting it; in shuffling, reports are randomly permuted so that they can no longer be linked to the people who sent them. Both keep individual data points private and are often used in social science research or situations where survey data needs to be anonymized.
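
Since the exponential mechanism is the least intuitive of the mechanisms listed above, here is a minimal sketch of the standard formulation, in which each candidate is chosen with probability proportional to exp(ε × utility / (2 × sensitivity)). The function name `exponential_mechanism`, its parameters, and the survey data are hypothetical and chosen for illustration only.

```python
import math
import random


def exponential_mechanism(candidates, utility, epsilon, sensitivity, rng):
    """Select one candidate, favoring those with higher utility.

    Each candidate c is chosen with probability proportional to
    exp(epsilon * utility(c) / (2 * sensitivity)), where `sensitivity`
    bounds how much one individual can change any utility score.
    """
    if epsilon <= 0 or sensitivity <= 0:
        raise ValueError("epsilon and sensitivity must be positive")
    scores = [utility(c) for c in candidates]
    # Subtracting the top score leaves the probabilities unchanged but
    # keeps exp() from overflowing on large scores.
    top = max(scores)
    weights = [math.exp(epsilon * (s - top) / (2.0 * sensitivity)) for s in scores]
    return rng.choices(candidates, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    votes = ["tea"] * 30 + ["coffee"] * 50 + ["juice"] * 20  # made-up survey
    options = ["tea", "coffee", "juice"]
    # Utility is the vote count; one person changes any count by at most 1.
    winner = exponential_mechanism(options, votes.count, 1.0, 1.0, rng)
    print("privately selected favorite:", winner)
```

With a large epsilon the most popular option is returned almost every time, and as epsilon approaches zero the choice approaches a uniform random pick, which mirrors the privacy and accuracy trade-off of the numeric mechanisms.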

Real-World Applications of Differential Privacy

Differential privacy is already being applied in a variety of industries to ensure that data can be used for analysis without compromising individual privacy. Below are some notable real-world applications:

- Google’s Browsing History: In 2014, Google introduced Randomized Aggregatable Privacy-Preserving Ordinal Response (RAPPOR) to Chrome, which allowed the company to collect data about users’ browsing habits while protecting individual privacy. The technique applies differential privacy to collect probabilistic information, making it difficult to trace the data back to specific users. Google open-sourced its differential privacy technology in 2019, allowing other companies to implement similar measures in their own applications.

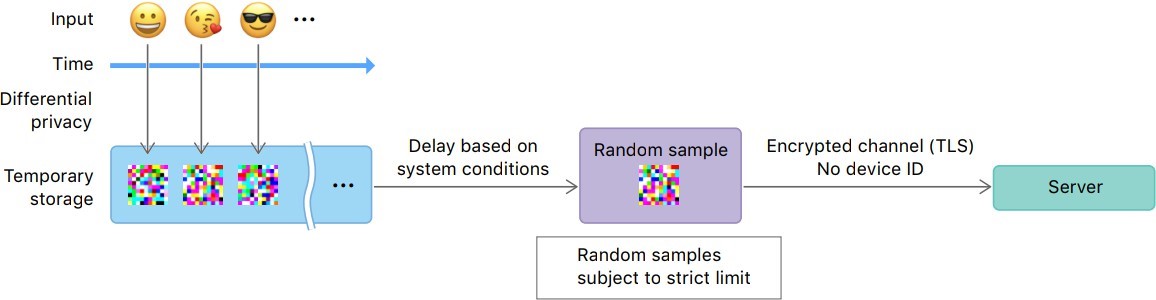

- Apple’s App Usage Data: Apple has been using differential privacy for several years to analyze user behavior in a privacy-preserving manner. For example, Apple collects data about emoji usage, search queries, and app usage patterns, but it adds noise to individual data points to ensure privacy before analyzing the data. This is an example of local differential privacy, where the noise is added to the data before it is sent to Apple’s servers.

- Healthcare Advertising: In the healthcare industry, differential privacy enables advertisers to target specific demographics for advertising campaigns without relying on personally identifiable information. By using aggregated data, healthcare advertisers can create lookalike models to identify and target individuals who fit a certain profile, all while ensuring that no individual’s data is exposed.

- U.S. Census Bureau: In 2020, the U.S. Census Bureau implemented differential privacy techniques to protect the identities of individuals within the census dataset. By injecting noise into the published statistics, the Bureau aimed to prevent attempts to re-identify individuals from the aggregated census data, maintaining privacy while still providing valuable insights for policymakers and researchers.
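
The local model mentioned in the Apple example, where noise is added before data leaves the device, can be illustrated with classic randomized response. The sketch below is a deliberately simplified illustration and is not the actual RAPPOR or Apple implementation. The function names (`randomize_answer`, `estimate_true_rate`) and the simulated population are assumptions made for this example.

```python
import math
import random


def randomize_answer(truth: bool, epsilon: float, rng: random.Random) -> bool:
    """Run on the user's device: report the true yes/no answer with
    probability e^eps / (e^eps + 1), otherwise report the opposite."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    return truth if rng.random() < p_truth else not truth


def estimate_true_rate(reports, epsilon: float) -> float:
    """Run on the server: correct the observed 'yes' rate for the known
    flipping probability to estimate the true 'yes' rate."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    p = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    observed = sum(reports) / len(reports)
    # observed = p * rate + (1 - p) * (1 - rate); solve for rate.
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)


if __name__ == "__main__":
    rng = random.Random(0)
    # Simulated population in which 30% of users would truthfully say "yes".
    truths = [True] * 3000 + [False] * 7000
    reports = [randomize_answer(t, 1.0, rng) for t in truths]
    print(f"estimated yes-rate: {estimate_true_rate(reports, 1.0):.3f}")
```

The server never sees a trustworthy individual answer, since any single report may have been flipped, yet the aggregate estimate gets closer to the true rate as the number of reports grows.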

The Future of Differential Privacy: Trends and Predictions for 2025

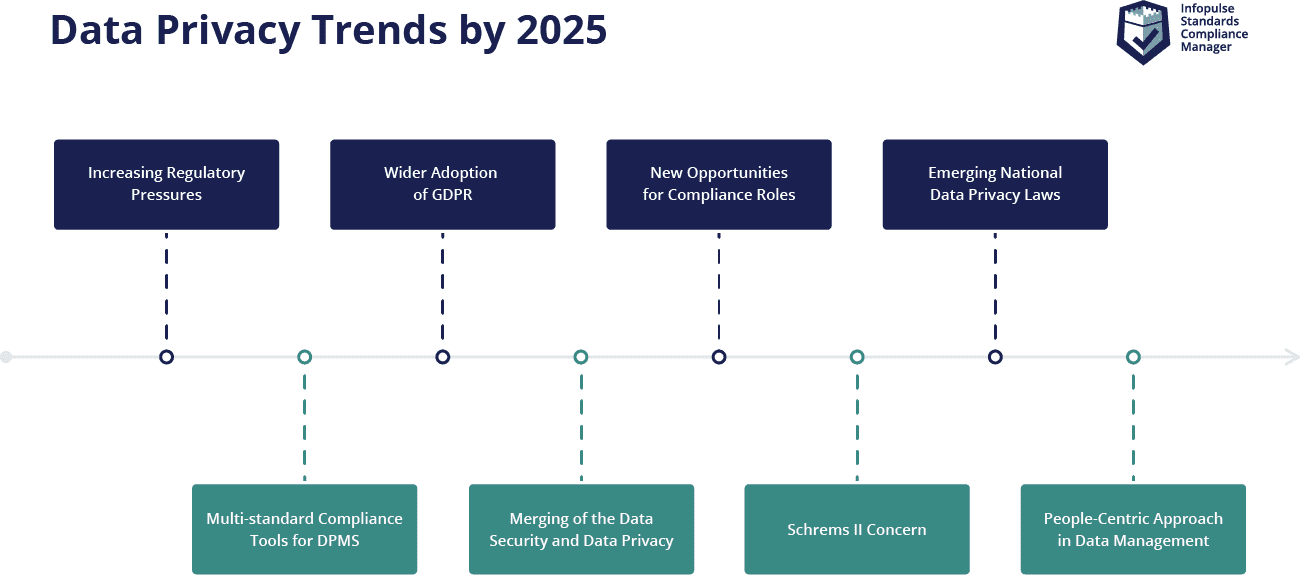

As we look ahead to 2025, several key trends are shaping the future of differential privacy and data privacy in general. These trends point to a future where privacy-enhancing technologies (PETs) will become essential components of data protection strategies across industries.

- Rise of Privacy-Enhancing Technologies (PETs): As organizations strive to balance innovation and data protection, technologies such as homomorphic encryption, federated learning, and differential privacy are gaining traction. These technologies enable organizations to derive insights from data without compromising privacy, making them indispensable tools in the evolving data privacy landscape.

- Blockchain for Data Privacy: Blockchain technology, known for its decentralized and secure nature, is expected to play a pivotal role in securing personal data. By offering transparency, immutability, and control over data access, blockchain provides an additional layer of security. In 2025, we are likely to see more industries adopting blockchain solutions to enhance data privacy, especially in sectors like finance and healthcare.

- AI and Machine Learning in Data Privacy: AI and ML are increasingly becoming integral to data privacy. These technologies will be used to predict and detect privacy threats, monitor suspicious activities, and enforce privacy policies. With AI’s ability to analyze vast amounts of data in real time, it will become a key asset in identifying privacy risks and ensuring compliance with privacy regulations.

- Global Data Protection Regulations: As data privacy concerns continue to escalate, countries around the world are implementing and refining regulations such as the GDPR in the EU and the CCPA in California. In 2025, organizations will need to navigate an increasingly complex regulatory landscape, ensuring compliance with both local and global privacy laws.

- Focus on User Consent and Control: As users become more aware of their privacy rights, there will be a greater emphasis on providing users with control over their personal data. Expect to see the rise of privacy-centric features, such as granular consent options, user-friendly privacy settings, and increased transparency in how data is collected and used.

- Cybersecurity Measures for Data Protection: With the increasing sophistication of cyber threats, robust cybersecurity measures will be essential for safeguarding data privacy. Companies will need to integrate advanced threat detection systems, multi-factor authentication, and end-to-end encryption to protect sensitive data from cyber-attacks.

Challenges and Limitations of Differential Privacy

Despite its promising potential, implementing differential privacy comes with its own set of challenges and limitations:

- Data Utility vs. Privacy: One of the key challenges of differential privacy is finding the right balance between data utility and privacy. While adding noise to data ensures privacy, it can also reduce the accuracy of the insights derived from that data. Striking a balance that ensures both privacy and usefulness is critical.

- Computational Complexity: Implementing differential privacy, especially for large-scale datasets, can be computationally expensive. Adding noise to each data point or query result requires additional computational resources, which may not be feasible for all organizations.

- Complexity in Implementation: Differential privacy is a complex field that requires a deep understanding of the underlying statistical mechanisms. For organizations that are new to privacy-preserving techniques, implementing differential privacy can be a daunting task.

- User Trust: While differential privacy helps protect individual privacy, users need to trust that their data is being handled correctly. Clear communication and transparency about how differential privacy is being used are essential for building user trust.
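
The utility cost in the first challenge above can be made concrete for the Laplace mechanism. As a worked example based on standard properties of the Laplace distribution (not on figures from any deployment mentioned in this post), let $\Delta f$ be the most that one individual can change the query result. The mechanism adds noise with scale $\Delta f / \varepsilon$, which gives:

$$
\mathbb{E}\,\lvert \text{noise} \rvert = \frac{\Delta f}{\varepsilon},
\qquad
\operatorname{Var}(\text{noise}) = 2\left(\frac{\Delta f}{\varepsilon}\right)^{2}
$$

For a simple count, $\Delta f = 1$. With $\varepsilon = 0.1$ the answer is off by 10 on average, while with $\varepsilon = 1$ it is off by 1 on average. An error of 10 is negligible for a count in the millions but can swamp a count of a few dozen, which is why the right balance depends heavily on the size of the dataset and the question being asked.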

Conclusion

Differential privacy is rapidly becoming an essential tool for businesses and organizations that aim to safeguard user privacy while still extracting valuable insights from data. With increasing adoption of privacy-enhancing technologies, the rise of blockchain, and the evolving regulatory landscape, the future of data privacy is poised for significant transformation. However, as the use of differential privacy becomes more widespread, organizations must navigate challenges related to data utility, computational complexity, and implementation to maximize the effectiveness of these techniques. In 2025 and beyond, organizations that embrace differential privacy and prioritize user privacy will be better positioned to build trust, comply with data protection laws, and unlock the full potential of their data-driven innovations.